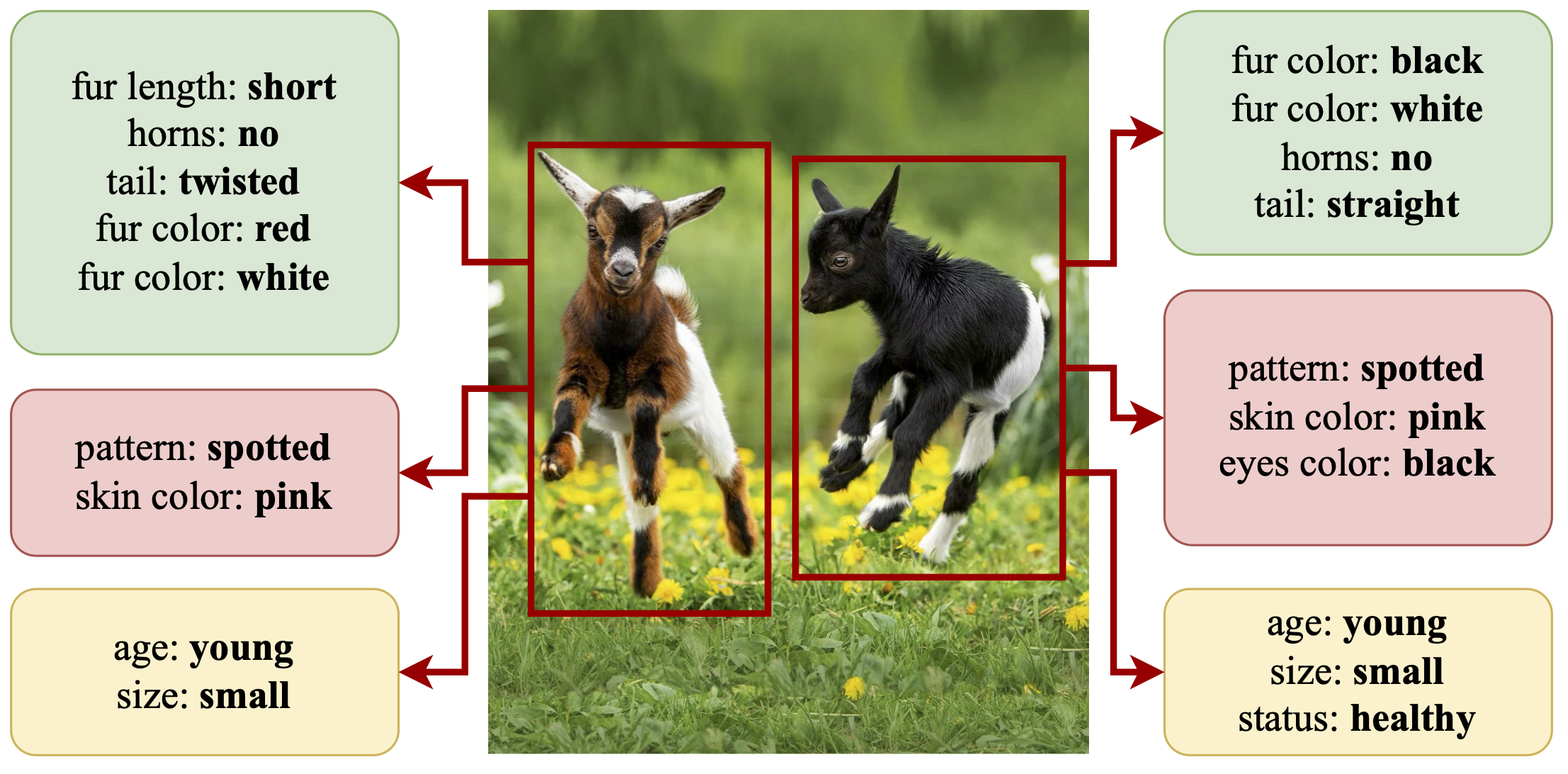

Attribute detection is crucial for many computer vision tasks, as it enables systems to describe properties such as color, texture, and material. Current approaches often rely on labor-intensive annotation processes, which are inherently limited: objects can be described at an arbitrary level of detail (e.g., color vs. color shades), leading to ambiguities when annotators are not carefully instructed. Furthermore, these approaches operate within a predefined set of attributes, reducing scalability and adaptability to unforeseen downstream applications.

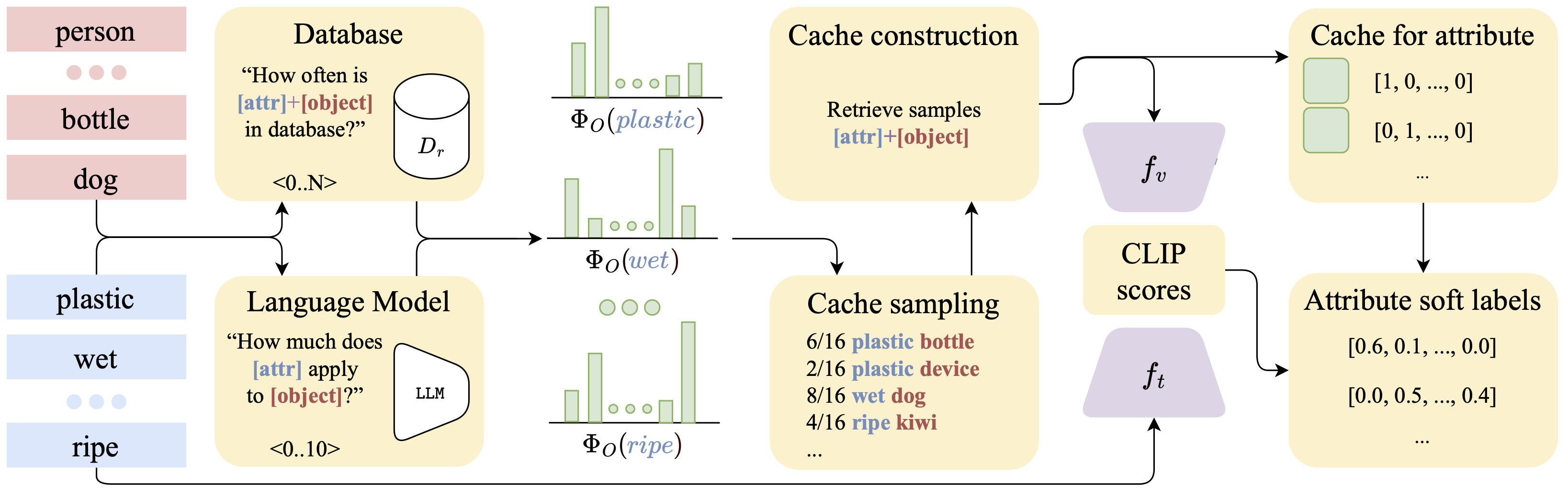

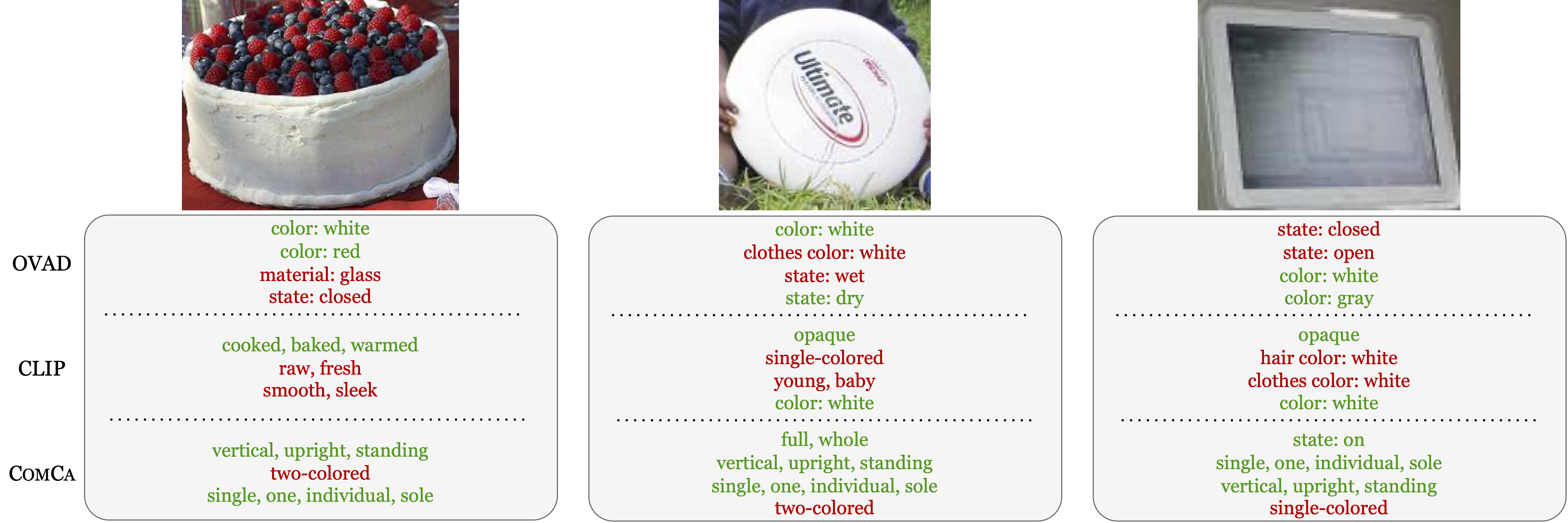

We present Compositional Caching (ComCa), a training-free method for open-vocabulary attribute detection that overcomes these constraints. ComCa requires only the list of target attributes and objects as input, using them to populate an auxiliary cache of images by leveraging web-scale databases and Large Language Models to determine attribute-object compatibility. To account for the compositional nature of attributes, cache images receive soft attribute labels. These labels are aggregated at inference time based on the similarity between the input and cache images, refining the predictions of the underlying Vision-Language Models (VLMs). Importantly, our approach is model-agnostic and compatible with various VLMs.
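The inference-time aggregation described above can be illustrated with a minimal sketch. It is not the ComCa implementation: the exponential similarity weighting, the sharpness parameter `beta`, the mixing weight `lam`, and the function names `cache_scores` and `refine` are all assumptions made for illustration, and the feature vectors and scores are toy values rather than VLM outputs.

```python
import math


def cosine(u, v):
    """Cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cache_scores(query, cache_feats, cache_soft_labels, beta=5.0):
    """Aggregate soft attribute labels of cache images, weighted by similarity.

    Assumption: weights follow exp(-beta * (1 - similarity)), a common choice
    in cache-based adaptation; the source does not specify the weighting.
    """
    weights = [math.exp(-beta * (1.0 - cosine(query, f))) for f in cache_feats]
    total = sum(weights)
    n_attrs = len(cache_soft_labels[0])
    return [
        sum(w * labels[j] for w, labels in zip(weights, cache_soft_labels)) / total
        for j in range(n_attrs)
    ]


def refine(zero_shot, cached, lam=0.5):
    """Blend zero-shot VLM scores with cache scores (lam is hypothetical)."""
    return [(1.0 - lam) * z + lam * c for z, c in zip(zero_shot, cached)]


# Toy data: three attributes, two cache images with soft (non one-hot) labels.
attributes = ["red", "wooden", "striped"]
cache_feats = [[1.0, 0.0], [0.0, 1.0]]
cache_soft_labels = [[0.9, 0.1, 0.0], [0.1, 0.8, 0.3]]

query = [1.0, 0.0]           # input image feature (toy value)
zero_shot = [0.4, 0.5, 0.1]  # zero-shot attribute scores (toy values)

cached = cache_scores(query, cache_feats, cache_soft_labels)
final = refine(zero_shot, cached)
top_attribute = attributes[final.index(max(final))]
```

In this toy case the zero-shot scores alone favor "wooden", while the cache image closest to the query carries a high soft label for "red", so the refined prediction switches to "red". Because the sketch only consumes feature vectors and scores, any VLM that produces them could be plugged in, which is consistent with the model-agnostic claim above.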

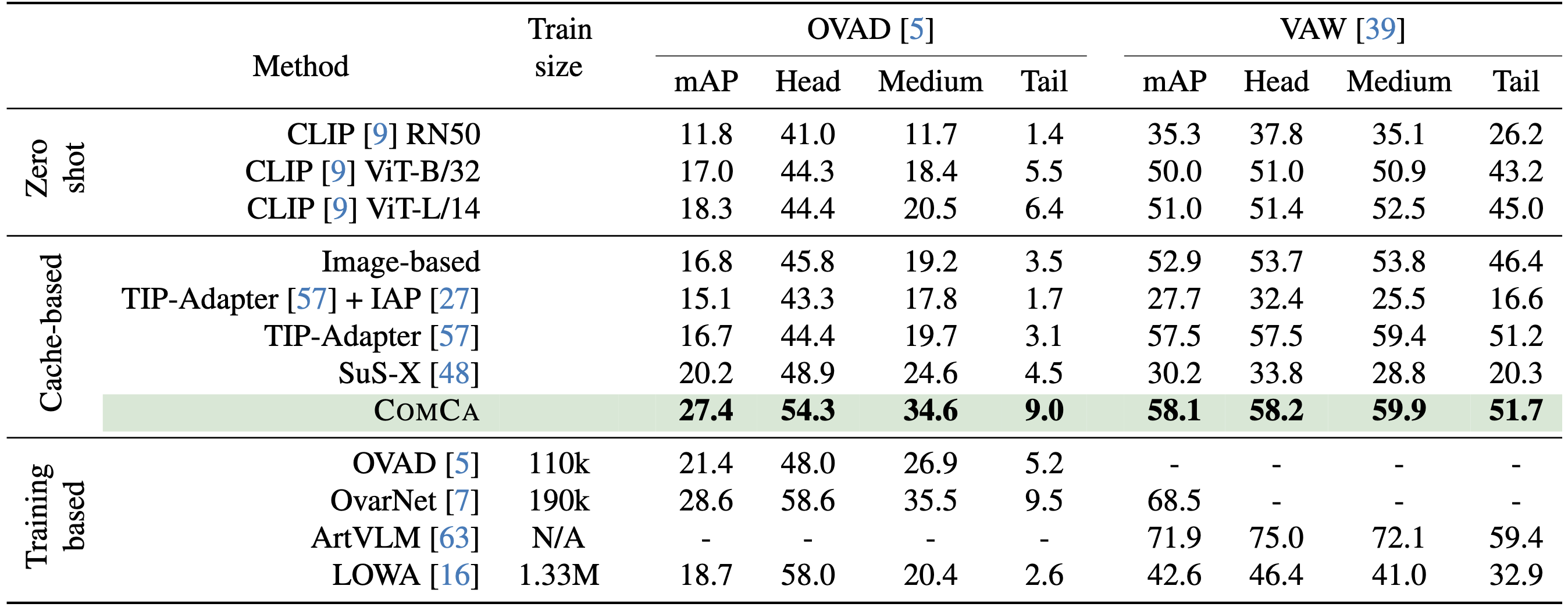

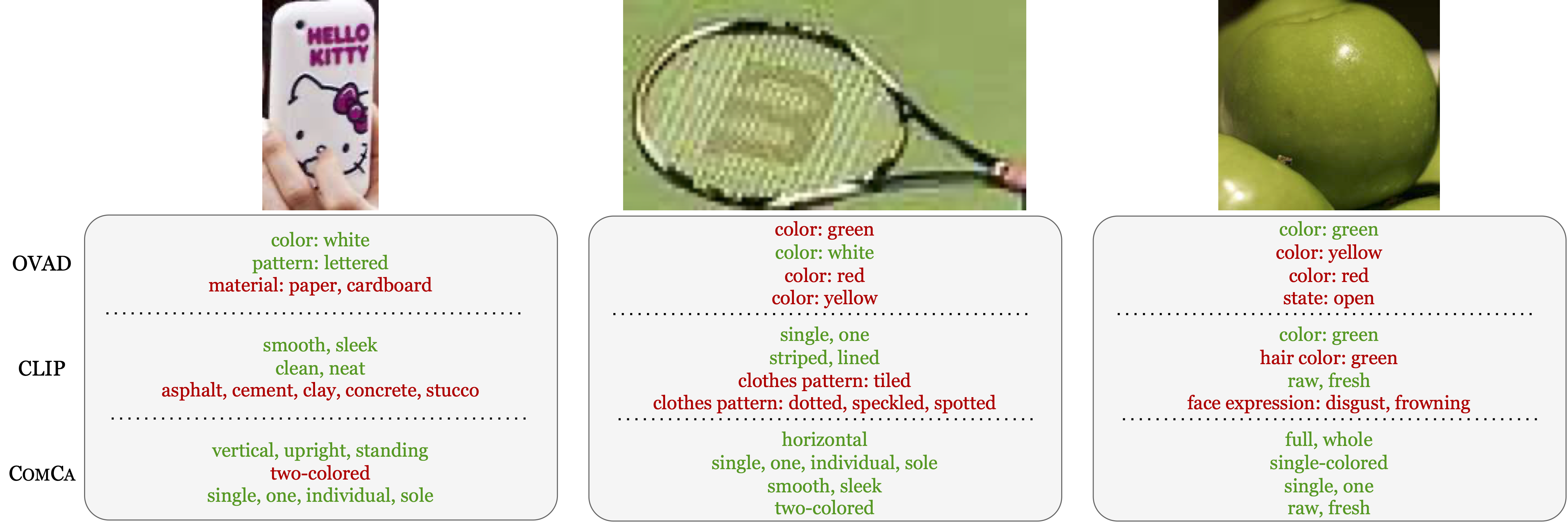

Experiments on public datasets demonstrate that ComCa significantly outperforms zero-shot and cache-based baselines and is competitive with recent training-based methods, showing that a carefully designed training-free approach can successfully address open-vocabulary attribute detection.